Data Preprocessing

Introduction

In machine learning, raw data is rarely ready for modeling straight out of the box. It is often messy, inconsistent, and full of irrelevant information. This is where data preprocessing comes into play. In simple terms, data preprocessing refers to the steps taken to clean, organize, and prepare raw data into a structured form suitable for machine learning algorithms.

In this post, we will walk through the key concepts and steps involved in data preprocessing, from handling missing values to scaling features, and explain why each step matters for the performance of machine learning models.

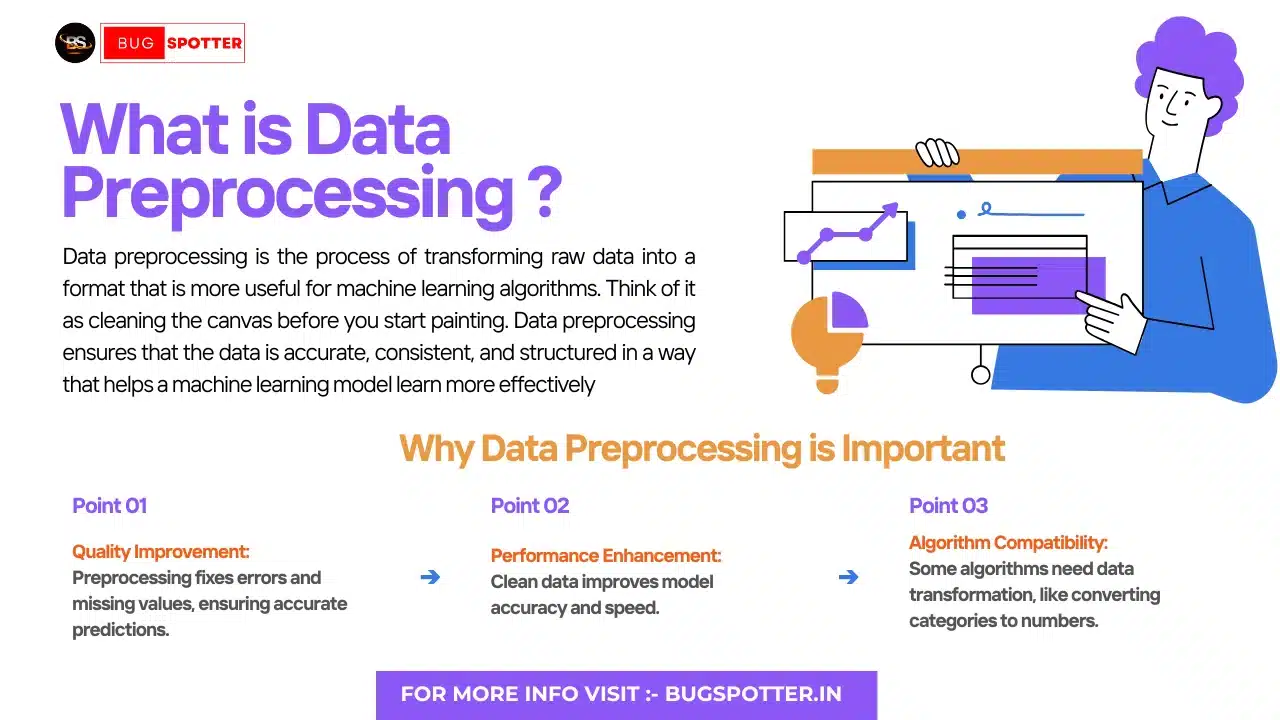

What is Data Preprocessing?

Data preprocessing is the process of transforming raw data into a format that is more useful for machine learning algorithms. Think of it as cleaning the canvas before you start painting. Data preprocessing ensures that the data is accurate, consistent, and structured in a way that helps a machine learning model learn more effectively.

Why is Data Preprocessing Important?

- Quality Improvement: Raw data often contains errors, inconsistencies, and missing values that can lead to inaccurate predictions.

- Performance Enhancement: Properly preprocessed data can improve the performance of models, making them more accurate and faster.

- Algorithm Compatibility: Different algorithms require different kinds of data. For instance, some algorithms may not work well with categorical data unless it is transformed into numerical data.

Data Preprocessing in Data Mining vs. Machine Learning

Data preprocessing plays a crucial role not just in machine learning, but also in data mining, which focuses on discovering patterns in large datasets. While both fields share common preprocessing techniques, they differ in their goals:

- Data Mining: The focus is on discovering unknown patterns or relationships in the data, so preprocessing involves preparing data for exploratory analysis and pattern discovery.

- Machine Learning: The goal is to train predictive models, which means preprocessing is more focused on structuring data to optimize model performance.

Despite these differences, the core preprocessing techniques are quite similar in both fields and involve tasks like cleaning, transformation, and normalization.

Steps in Data Preprocessing

1. Data Cleaning

The first step in data preprocessing is cleaning the data. This includes removing noise, fixing errors, and handling missing values.

Handling Missing Data: Missing values can arise due to incomplete data or errors during data collection. Common techniques to handle missing data include:

- Imputation: Replace missing values with the mean, median, or mode.

- Deletion: Remove rows or columns with missing values.

- Forward/Backward Fill: For time-series data, missing values can be filled with the previous or next available value.
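The three techniques above can be sketched with pandas. This is a minimal illustration rather than a recommended recipe: the DataFrame and its column names (`age`, `city`, `temp`) are made up for the example, and the right strategy depends on why the values are missing.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; the column names are illustrative only.
df = pd.DataFrame({
    "age": [25.0, np.nan, 31.0, 40.0],
    "city": ["Paris", "Lima", None, "Paris"],
    "temp": [30.1, np.nan, np.nan, 29.5],
})

# Imputation: fill the numeric column with its median
# and the categorical column with its mode (most frequent value).
imputed = df.copy()
imputed["age"] = imputed["age"].fillna(imputed["age"].median())
imputed["city"] = imputed["city"].fillna(imputed["city"].mode()[0])

# Deletion: drop every row that contains at least one missing value.
dropped = df.dropna()

# Forward fill, then backward fill, for an ordered (time-series-like) column.
# The backward fill only matters if the series starts with a missing value.
filled_temp = df["temp"].ffill().bfill()

print(imputed)
print(dropped)
print(filled_temp)
```

Deletion is the simplest option but throws away information (here it keeps only two of the four rows), so imputation is often preferred when missing values are common.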

Removing Duplicates: Duplicate entries can skew results. Identifying and removing duplicates ensures the dataset is accurate.

Fixing Errors: Often, data entries might be incorrect due to human error or system faults (e.g., negative values in age columns). Identifying and correcting these errors is crucial for accuracy.
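As a small sketch of both ideas, the pandas snippet below removes exact duplicate rows and then flags impossible ages. The data is invented, and the valid range of 0 to 120 is an assumption chosen for the example; in practice the rule should come from domain knowledge.

```python
import pandas as pd

# Hypothetical records: one exact duplicate and one impossible age.
df = pd.DataFrame({
    "name": ["Ana", "Ben", "Ben", "Cal"],
    "age": [34, 28, 28, -5],
})

# Remove exact duplicate rows, keeping the first occurrence.
deduped = df.drop_duplicates().reset_index(drop=True)

# Replace ages outside the assumed valid range with NaN
# instead of guessing what the correct value should have been.
cleaned = deduped.copy()
cleaned["age"] = cleaned["age"].where(cleaned["age"].between(0, 120))

print(cleaned)
```

Marking the bad value as missing keeps the row and lets the missing-data techniques above decide what to do with it.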

2. Data Transformation

Once the data is cleaned, the next step is transformation, which involves converting data into a format that can be efficiently processed by machine learning algorithms.

Normalization/Scaling: Many machine learning algorithms are sensitive to the scale of the data, including distance-based methods like k-nearest neighbors and models trained with gradient descent. Normalization puts all features on a comparable scale, typically between 0 and 1, so that features with large numeric ranges do not dominate the others.

- Min-Max Scaling: Rescales features to lie within a given range.

- Standardization (Z-Score Scaling): Rescales features by removing the mean and scaling to unit variance, useful when the features follow a Gaussian distribution.
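To make the two formulas concrete, here is a minimal NumPy sketch on a made-up feature matrix. It assumes no column is constant (otherwise the denominators would be zero). Scikit-learn's `MinMaxScaler` and `StandardScaler` implement the same transformations with extra safeguards.

```python
import numpy as np

# Hypothetical feature matrix: rows are samples, columns are features.
X = np.array([[1.0, 200.0],
              [2.0, 400.0],
              [3.0, 600.0]])

# Min-max scaling: (x - min) / (max - min), computed per column,
# which maps every feature into the range [0, 1].
col_min = X.min(axis=0)
col_max = X.max(axis=0)
X_minmax = (X - col_min) / (col_max - col_min)

# Standardization: (x - mean) / std, computed per column,
# which gives every feature mean 0 and unit variance.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_minmax)
print(X_std)
```

After either transformation the two columns become identical, even though the raw values differed by a factor of 200.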

Encoding Categorical Data: Many machine learning algorithms require numerical data, so categorical data must be transformed into numbers.

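- Label Encoding: Converts each category to an integer label. Because integers imply an order, this works best for ordinal categories (e.g., small, medium, large).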

- One-Hot Encoding: Converts categorical values into a binary matrix, creating a new column for each category.
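Both encodings can be sketched in a few lines of pandas. The columns `size` and `color` and the ordering small < medium < large are assumptions made for this example.

```python
import pandas as pd

# Hypothetical data with one ordinal and one nominal column.
df = pd.DataFrame({
    "size": ["small", "large", "medium", "small"],
    "color": ["red", "green", "red", "blue"],
})

# Label encoding: map each category to an integer, using an explicit order
# so that the integers reflect small < medium < large.
size_order = ["small", "medium", "large"]
df["size_code"] = pd.Categorical(
    df["size"], categories=size_order, ordered=True
).codes

# One-hot encoding: one indicator column per distinct value of "color".
one_hot = pd.get_dummies(df["color"], prefix="color")

print(df)
print(one_hot)
```

One-hot encoding avoids implying an order between colors, at the cost of adding one column per category.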

3. Feature Engineering

Feature engineering involves creating new features or modifying existing features to improve the model’s predictive power.

Creating New Features: Sometimes, raw data may not capture enough complexity. By combining or transforming existing features, you can create new ones that provide additional insights.

- Polynomial Features: Creating higher-degree polynomial features can help capture more complex relationships between variables.
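As an illustration, scikit-learn's `PolynomialFeatures` can expand two input features into all terms up to degree 2. The input values below are arbitrary.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Two samples with two features each (x0, x1); values are arbitrary.
X = np.array([[2.0, 3.0],
              [1.0, 4.0]])

# Degree-2 expansion without the constant (bias) column.
# Output columns are: x0, x1, x0^2, x0*x1, x1^2
poly = PolynomialFeatures(degree=2, include_bias=False)
X_poly = poly.fit_transform(X)

print(X_poly)
```

The number of generated columns grows quickly with the degree and the number of input features, so this is usually combined with feature selection or regularization.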

Feature Selection: Keeping only the most relevant features can reduce overfitting and computational cost. Methods like Variance Threshold, Recursive Feature Elimination (RFE), and feature importances from Random Forests can help identify important features.
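The simplest of these methods, Variance Threshold, can be sketched with scikit-learn as follows. The matrix is made up, and a threshold of 0.0 is used so that only constant columns are dropped.

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold

# Hypothetical feature matrix; the middle column is constant
# and therefore carries no information for a model.
X = np.array([[1.0, 0.0, 10.0],
              [2.0, 0.0, 20.0],
              [3.0, 0.0, 15.0]])

# Drop every feature whose variance is not above the threshold.
selector = VarianceThreshold(threshold=0.0)
X_reduced = selector.fit_transform(X)

# Boolean mask telling which original columns were kept.
kept = selector.get_support()

print(kept)
print(X_reduced)
```

Unlike RFE or Random Forest importances, this method never looks at the target variable, so it only removes features that are uninformative on their own.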

4. Data Splitting

Before training a machine learning model, it’s important to split the data into separate sets: one for training the model, one for testing it, and sometimes a third for validation. This helps evaluate the model’s performance on unseen data.

- Training Set: Used to train the model.

- Test Set: Used to evaluate the model’s performance after training.

- Validation Set: Sometimes, a separate validation set is used for hyperparameter tuning to ensure that the model generalizes well.

A common ratio is 80% training data and 20% testing data. Other ratios such as 70/30 or 60/40 are also used, depending on how much data is available and how reliable the test estimate needs to be.
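Here is a minimal sketch of an 80/20 split with scikit-learn's `train_test_split`, followed by a second split that carves a validation set out of the training data. The arrays are synthetic and `random_state=42` is an arbitrary seed used only to make the example reproducible.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic data: 100 samples with 2 features and a binary label.
X = np.arange(200).reshape(100, 2)
y = np.arange(100) % 2

# First split: 80% training, 20% test.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Second split: take 25% of the training data as a validation set,
# which gives 60% train, 20% validation, 20% test overall.
X_train, X_val, y_train, y_val = train_test_split(
    X_train, y_train, test_size=0.25, random_state=42
)

print(len(X_train), len(X_val), len(X_test))
```

The test set should be set aside first and left untouched until the final evaluation; tuning decisions are made on the validation set.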

5. Data Augmentation (Optional)

Data augmentation is particularly useful in cases where you have limited data, such as image classification tasks. It involves artificially increasing the size of your dataset by applying random transformations (e.g., rotating, flipping, or cropping images) to create new data points from existing ones.
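The transformations mentioned above can be sketched with plain NumPy. The helper `augment` and the random 32x32 "image" are hypothetical stand-ins for this example; real projects typically rely on the augmentation utilities of their deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a real picture: a random 32x32 RGB image.
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

def augment(img, rng, crop=28):
    """Return a randomly flipped, rotated, and cropped copy of img (H x W x C)."""
    out = img
    # Horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        out = np.fliplr(out)
    # Rotate by a random multiple of 90 degrees.
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    # Random crop of size crop x crop.
    h, w = out.shape[:2]
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    return out[top:top + crop, left:left + crop].copy()

# Create four new training examples from the single original image.
augmented = [augment(image, rng) for _ in range(4)]
print([a.shape for a in augmented])
```

Augmentation is normally applied only to the training set, so that the test set still reflects real, unmodified data.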

Data Preprocessing Techniques

Data preprocessing techniques are crucial to preparing raw data for both machine learning and data mining applications. Some common preprocessing techniques include:

- Handling Missing Data: As discussed earlier, imputation or deletion methods are essential for dealing with missing values.

- Outlier Detection and Treatment: Identifying and handling outliers ensures that extreme values do not distort model performance.

- Data Normalization and Standardization: Scaling all features appropriately so that features with large numeric ranges do not dominate model training.

- Feature Engineering and Selection: Creating and selecting features that improve the predictive power of models.
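Outlier detection is the one technique in this list not covered step by step earlier, so here is a minimal sketch using the interquartile range (IQR) rule. The data is invented, and the 1.5 x IQR cutoff is a common convention rather than a universal rule.

```python
import numpy as np

# Hypothetical measurements with one extreme value.
values = np.array([10.0, 12.0, 11.0, 13.0, 12.0, 95.0])

# Interquartile range: the spread of the middle 50% of the data.
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1

# Points further than 1.5 * IQR outside the quartiles are flagged as outliers.
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (values < lower) | (values > upper)

# Two common treatments: remove the outliers, or clip them to the bounds.
filtered = values[~is_outlier]
clipped = np.clip(values, lower, upper)

print(is_outlier)
print(filtered)
print(clipped)
```

Whether to remove, clip, or keep an outlier depends on whether it is a data error or a genuine but rare observation.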

Tools and Libraries for Data Preprocessing

Fortunately, there are many tools and libraries available to simplify data preprocessing. Some popular ones include:

- Pandas: A powerful Python library for data manipulation and analysis, particularly useful for handling missing data, duplicates, and basic transformations.

- Scikit-learn: Offers many preprocessing tools like scaling, encoding, and feature selection techniques.

- NumPy: Ideal for numerical data manipulation.

- TensorFlow/Keras: In deep learning, these frameworks provide built-in methods for preprocessing image, text, and time-series data.
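To show how these libraries fit together, the sketch below chains two scikit-learn preprocessing steps into a `Pipeline`. The arrays are made up, and median imputation followed by standardization is just one reasonable combination, not a prescribed one.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical training and test data with missing values.
X_train = np.array([[1.0, 10.0],
                    [2.0, np.nan],
                    [3.0, 30.0]])
X_test = np.array([[np.nan, 20.0]])

# Chain the steps: fill missing values with the median, then standardize.
prep = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Learn medians, means, and standard deviations from the training data only,
# then reuse those same statistics on the test data.
X_train_prepared = prep.fit_transform(X_train)
X_test_prepared = prep.transform(X_test)

print(X_train_prepared)
print(X_test_prepared)
```

Fitting the pipeline on the training set and only transforming the test set keeps information from the test data out of the preprocessing step.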
